Brian Wansink enjoyed years as one of the most renowned food researchers in the U.S., writing best-selling books, publishing hundreds of studies, and even serving at the U.S. Department of Agriculture, where he helped shape the nation’s dietary guidelines.

His research into eating habits and their effects on health, such as the connection between larger plate sizes and overeating, gained widespread acceptance. But on September 20, 2018, Cornell University announced Wansink’s resignation after finding that he had committed “academic misconduct in his research and scholarship, including misreporting of research data.”

Wansink’s case received a lot of media attention – but almost entirely due to the prominent position he had achieved, not because his misreporting was in any way unique. Similar instances of faulty data manipulation are widespread in academic circles. The process generally involves collecting and selecting data for statistical analyses until nonsignificant results become significant. Researchers under pressure to publish often misinterpret data – whether intentionally or not – to produce desired conclusions.

The dangers of overfitting in insurance

The insurance industry has a lot to learn from academia’s missteps. With predictive analytics and data science assuming ever-expanding roles in insurance risk modeling, carriers would be well-served to establish practices that mitigate the creation of faulty models. Overfitting, the process of deriving overly optimistic model results based on particular characteristics of a given sample, is of particular concern for insurers. Overfitted models pick up more of the noise in a dataset instead of the actual underlying pattern that exists in the real world, thus failing to provide accurate predictions or useful insights.

Generally, overfitting occurs due to analyst oversight in two key areas:

- Researcher degrees of freedom (also known as procedural overfitting, data dredging, p-hacking, etc.)

- Asking too much from the data (model complexity)

Researcher degrees of freedom

The concept of researcher degrees of freedom refers to the many choices available to data analysts in the course of an analysis, any of which can steer the work toward results that cannot be replicated. Prominent cases such as Brian Wansink’s are bringing more attention to the replication crisis affecting a range of disciplines, including food science, psychology, cancer research and many more.

The ability to replicate previous results is a critical component of the scientific process, so why do so many published findings fail to replicate? A variety of factors can contribute to drawing statistical significance from insignificant data: the means of combining and comparing conditions, excluding data in arbitrary ways, controlling for different predictors, etc.
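
To make the mechanism concrete, here is a minimal simulation sketch of one researcher degree of freedom: trying many candidate predictors and reporting whichever one clears the significance threshold. Everything in it is an illustrative assumption rather than part of the original analysis: the outcome and every predictor are pure noise, the function names are hypothetical, and p-values are approximated with the Fisher z-transformation instead of an exact t-test.

```python
import math
import random
from statistics import NormalDist


def pearson_r(xs, ys):
    """Sample Pearson correlation between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def approx_p_value(r, n):
    """Two-sided p-value for H0: no correlation (Fisher z approximation)."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def share_of_studies_with_a_finding(n_predictors, n_obs=100, n_studies=300,
                                    alpha=0.05, seed=1):
    """Fraction of simulated studies in which at least one of the
    candidate predictors looks 'significant', although none is related
    to the outcome by construction."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        outcome = [rng.gauss(0, 1) for _ in range(n_obs)]
        for _ in range(n_predictors):
            predictor = [rng.gauss(0, 1) for _ in range(n_obs)]
            p = approx_p_value(pearson_r(predictor, outcome), n_obs)
            if p < alpha:
                hits += 1
                break  # the analyst stops at the first "finding"
    return hits / n_studies


if __name__ == "__main__":
    for k in (1, 20):
        share = share_of_studies_with_a_finding(k)
        print(f"{k:>2} predictors tried: {share:.0%} of studies report a finding")
```

With a single pre-specified predictor, roughly 5% of these noise-only studies should produce a false finding, which is what the 0.05 threshold promises. With 20 independent predictors to choose from, the theoretical rate is 1 − 0.95^20, or about 64%, even though no real relationship exists.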

With all the choices to be made in a given analysis, employing proven strategies can help produce sound results that are more likely to be reproduced in the future:

1. Make research design decisions before analyzing the data

This starts with identifying a clear question to answer or problem to solve. Outlining the data subsets to focus on, the potential predictors of interest, and the methods to use in selecting a model then follows. While it may be impossible to anticipate every issue that can arise in the data, the more decisions made at the outset, the lower the chance of making arbitrary choices based on what the data happen to show.

2. Where applicable, use subject matter knowledge to inform data aggregation

The process of selecting, grouping, and comparing data need not start from scratch. Relying on previous research and industry best practices regarding data manipulation to inform decisions can help produce replicable results.

3. Limit the exclusion of data

While identifying and removing data entry errors is clearly important, this should only happen with absolute certainty that such data points are erroneous. Spending the time to develop a true understanding of the data-generating process can help limit faulty exclusions.

Asking too much from the data

Sometimes, simple models outperform very complex ones in predictive analytics. For any given dataset, the number of observations available allows for a maximum level of model complexity, above which no acceptable degree of prediction certainty is achievable. When a simpler model produces better predictions than a more complex model, overfitting has likely occurred.

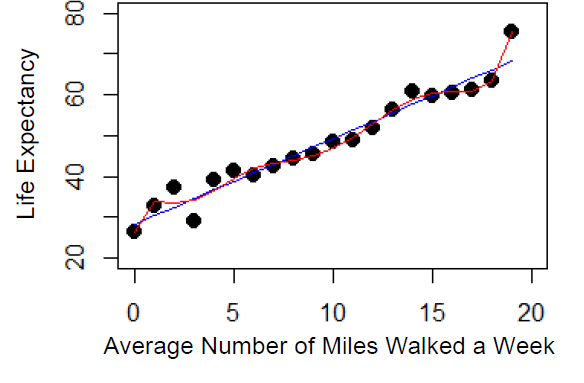

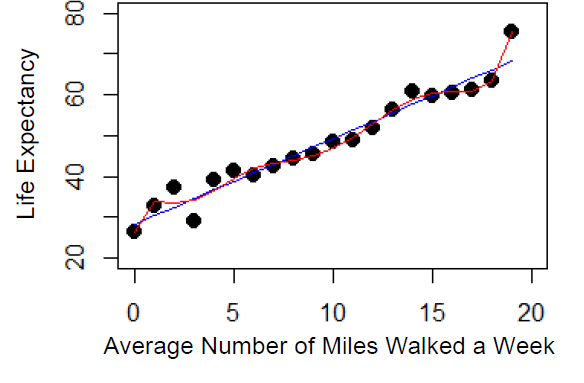

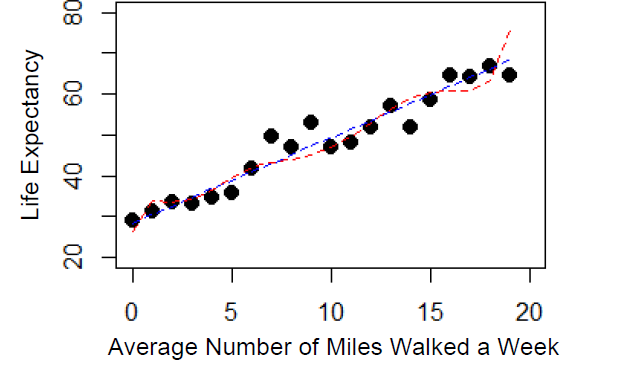

In the simulated example below, 20 data points are drawn from the same distribution. The x-axis represents the average number of miles walked a week, and the y-axis represents life expectancy. Two models that express life expectancy as a function of the weekly number of miles walked are plotted in Figure 1. A simple model (Y = β₀ + β₁X + ε) estimated on the 20 data points is represented by the blue line, while a more complex model (Y = β₀ + β₁X + β₂X² + … + β₈X⁸ + ε) estimated on the same points is represented by the red line.

Figure 1: Simulated Data

Even a quick look suggests that the complex model does a better job of fitting the sample dataset, and the numbers confirm it. (The simple model has a mean squared error [MSE] of 8.45, compared to an MSE of 3.27 for the complex model.) However, this comparison evaluates the models on the same dataset that was used to build them – a practice that is highly likely to overestimate performance.

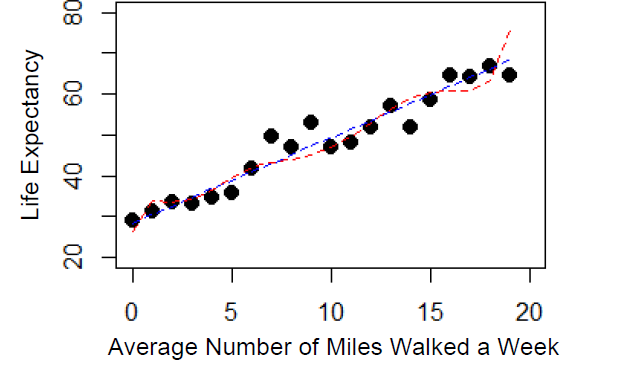

Figure 2 shows the same two models applied to a new set of 20 data points generated from the same distribution, in order to measure their out-of-sample performance.

Figure 2: New Simulated Data

Looking at both models on the new dataset leads to a different conclusion. The complex model no longer appears to predict the data points as well as it did on the previous set, which indeed it does not. (The simple model’s MSE is 8.86 compared to the complex model’s MSE of 17.76.)
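
The comparison can be reproduced in spirit with a short simulation. The sketch below is not the code behind Figures 1 and 2: the data-generating process (a linear relationship between miles walked and life expectancy plus normally distributed noise), the parameter values, and the function names are all assumptions chosen for illustration. It fits a degree-1 and a degree-8 polynomial to 20 training points, scores both on 20 fresh points, and averages over many repetitions so the conclusion does not hinge on one lucky or unlucky draw.

```python
import numpy as np
from numpy.polynomial import Polynomial


def draw_sample(rng, n_points):
    """Assumed data-generating process: a linear trend plus noise."""
    miles = rng.uniform(0, 30, n_points)            # miles walked per week
    life_expectancy = 70 + 0.4 * miles + rng.normal(0, 3, n_points)
    return miles, life_expectancy


def mse(model, x, y):
    """Mean squared error of a fitted model on the points (x, y)."""
    return float(np.mean((y - model(x)) ** 2))


def average_mse(n_points=20, n_reps=200, seed=0):
    """Average in-sample and out-of-sample MSE for both models.

    Returns (train_simple, train_complex, test_simple, test_complex).
    """
    rng = np.random.default_rng(seed)
    results = np.zeros((n_reps, 4))
    for rep in range(n_reps):
        x_train, y_train = draw_sample(rng, n_points)
        x_test, y_test = draw_sample(rng, n_points)  # new data, same process
        simple = Polynomial.fit(x_train, y_train, deg=1)
        complex_ = Polynomial.fit(x_train, y_train, deg=8)
        results[rep] = [
            mse(simple, x_train, y_train),
            mse(complex_, x_train, y_train),
            mse(simple, x_test, y_test),
            mse(complex_, x_test, y_test),
        ]
    return tuple(results.mean(axis=0))


if __name__ == "__main__":
    tr_s, tr_c, te_s, te_c = average_mse()
    print(f"in-sample MSE:     simple {tr_s:.2f}   complex {tr_c:.2f}")
    print(f"out-of-sample MSE: simple {te_s:.2f}   complex {te_c:.2f}")
```

Because the degree-8 model contains the linear model as a special case, its in-sample error can never be worse. The out-of-sample columns are the ones that matter, and under these assumptions the complex model should come out clearly behind.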

This example shows why it is essential to select candidate models based on out-of-sample performance rather than on how well they fit the dataset used to build them. When new data is not available, certain statistical techniques can estimate out-of-sample performance without the need to gather more, e.g., cross-validation, AIC/BIC, and bootstrapping. Remember: the goal is to determine which model gets us closer to learning about future outcomes, not which one best describes historical data.
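
As one illustration of these techniques, the following is a minimal sketch of k-fold cross-validation for polynomial models, assuming NumPy is available. The helper name `k_fold_mse` and the simulated data in the usage section are hypothetical and are not taken from the original analysis. Each observation is held out exactly once, predicted by a model fitted to the remaining folds, and the squared errors are averaged.

```python
import numpy as np
from numpy.polynomial import Polynomial


def k_fold_mse(x, y, degree, k=5, seed=0):
    """Estimate out-of-sample MSE of a polynomial fit via k-fold CV."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(x))         # shuffle before splitting
    folds = np.array_split(order, k)        # k disjoint held-out index sets
    squared_errors = []
    for held_out in folds:
        train = np.setdiff1d(order, held_out)
        model = Polynomial.fit(x[train], y[train], deg=degree)
        squared_errors.append((y[held_out] - model(x[held_out])) ** 2)
    return float(np.mean(np.concatenate(squared_errors)))


if __name__ == "__main__":
    # Assumed illustrative data: a linear trend plus noise, 20 observations.
    rng = np.random.default_rng(42)
    miles = rng.uniform(0, 30, 20)
    life_expectancy = 70 + 0.4 * miles + rng.normal(0, 3, 20)
    for degree in (1, 8):
        score = k_fold_mse(miles, life_expectancy, degree)
        print(f"degree {degree}: cross-validated MSE = {score:.2f}")
```

The candidate with the lower cross-validated error is the better choice for prediction. With only 20 observations the estimate is itself noisy, so repeating the procedure with different shuffles (different `seed` values) is a reasonable precaution.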

The Key: Vigilance in Validation

Ever-increasing computing power allows analysts to manipulate data and build models from both small and large datasets more quickly and more effectively than ever before. Yet these enhanced capabilities also come with a greater number of choices and greater exposure to building models that overfit the data. Tasked with determining an applicant’s mortality risk years into the future, life insurers must be acutely aware of the potential pitfalls of overfitting, take steps to accurately validate every predictive model they develop, and work continuously to update and improve models as new forms of data become available.

Click here to view a presentation on this subject from the 2018 SOA Predictive Analytics Symposium.